A/B Testing to Optimize Click Through Rate

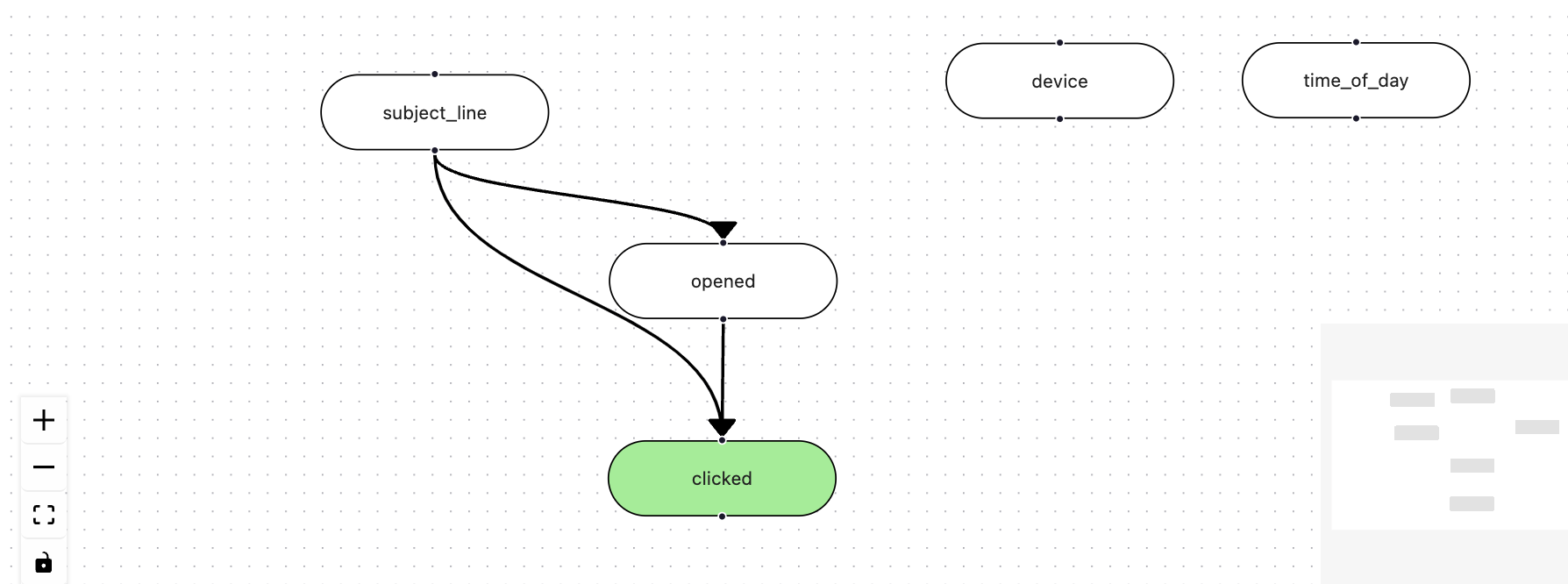

Marketers often test variations of subject lines in order to optimize click through rate (CTR). Many factors other than the subject line, however, impact the metric. For example, the time of day, the day of week, audience segment, and other factors could all impact CTR.

Using Daggy, we can easily measure how much the specific subject line drove CTR, as well as how the subject line performed under various cuts of the data.

Data

We have the following data:

| Data Point | Description |
|---|---|
| Subject Line | Two variants: A and B, randomly assigned throughout the day and across segments |
| Audience Segment | Three segments |
| Day of Week | - |
| Device | Tablet, desktop, mobile |
| Time of Day | Morning, evening, afternoon, night |
| Opened | Whether the user opened the email |
| Clicked | Whether the user followed the link in the email |

We care about measuring the impact of the Subject Line on CTR. Here, CTR is defined as the probability that a user clicked the link given that they opened the email, since a user cannot click the link without first opening the email.
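To make the conditional definition concrete, here is a minimal sketch of computing CTR per subject line from raw rows. The field names (`subject_line`, `opened`, `clicked`) and the sample rows are assumptions made up for illustration; they are not the dataset or schema used in this post.

```python
from collections import defaultdict

def ctr_by_subject(rows):
    """Return clicks / opens per subject line, ignoring unopened emails."""
    opens = defaultdict(int)
    clicks = defaultdict(int)
    for row in rows:
        if not row["opened"]:
            continue  # unopened emails cannot be clicked, so they are excluded
        opens[row["subject_line"]] += 1
        clicks[row["subject_line"]] += int(row["clicked"])
    return {subject: clicks[subject] / opens[subject] for subject in opens}

# Tiny made-up sample, for illustration only (not the data from this post).
sample = [
    {"subject_line": "A", "opened": True, "clicked": False},
    {"subject_line": "A", "opened": True, "clicked": True},
    {"subject_line": "A", "opened": False, "clicked": False},
    {"subject_line": "B", "opened": True, "clicked": True},
    {"subject_line": "B", "opened": False, "clicked": False},
]
rates = ctr_by_subject(sample)
print(rates)
```

Note that the denominator is the number of opened emails, not the number of emails sent, which is what distinguishes this definition from a click rate over all sends.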

Building a Model

We can start with a simple model that looks only at the effect of the subject line on the click through rate.

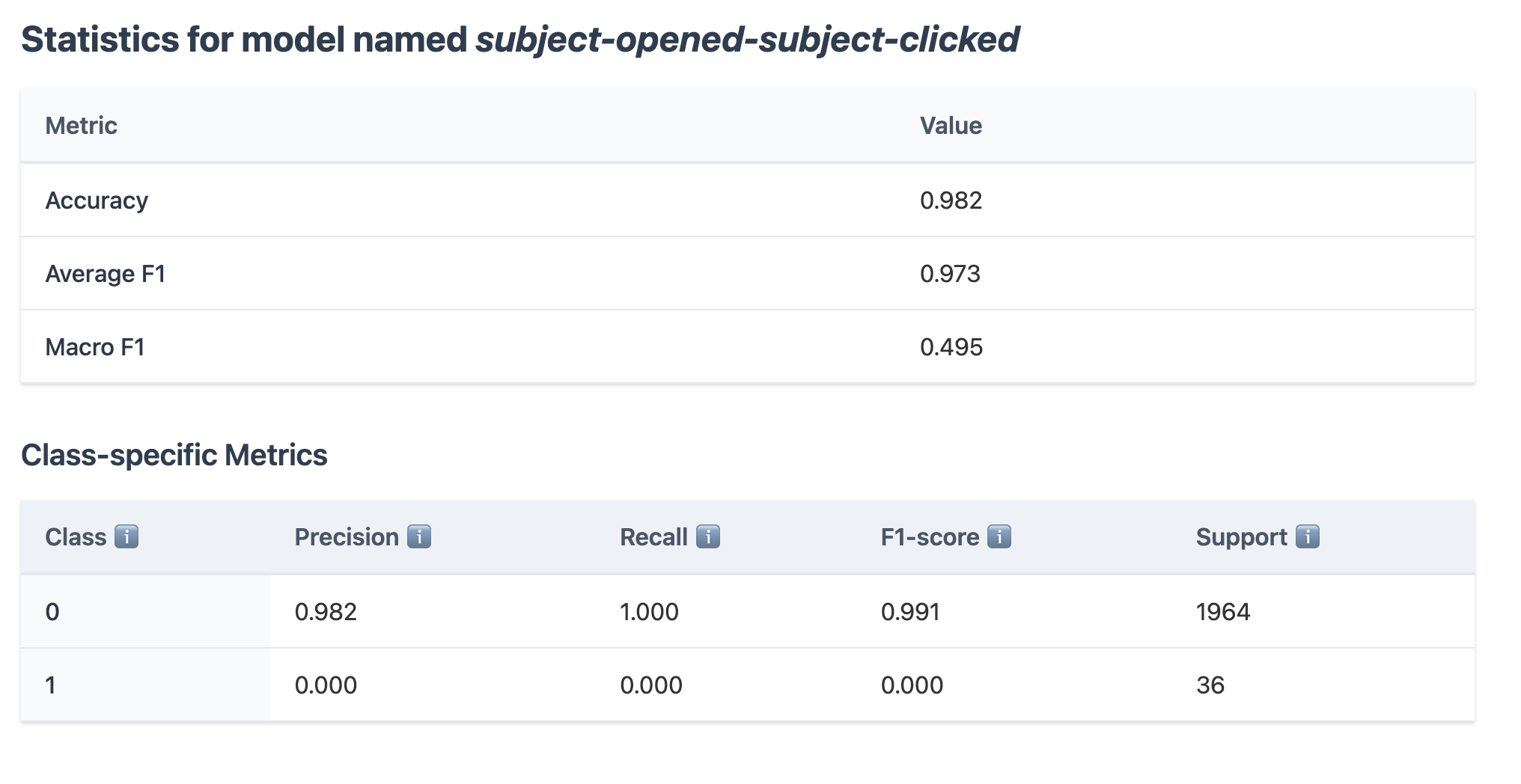

We named this model subject-opened-subject-clicked and trained it. After training completed, we can see the following metrics on a randomly held-out piece of our dataset. These are roughly what we would expect to see if we used this particular model in the future to predict click through rate.

Notice the row of zeros where class equals 1. This suggests that the model predicted no click for every row in the data. This is unsurprising given how low CTR is: people clicked on the links in only 36 of the two thousand emails originally sent.

We could improve our out-of-sample prediction by adjusting for all of the other variables (doing this gets our Macro F1 to 0.76), but since we know the subject line was randomly assigned, we can still estimate the causal impact of subject line A compared to subject line B with the simple model.
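As a rough illustration of why a row of zeros appears for class 1, the sketch below scores a classifier that always predicts class 0 on a heavily imbalanced set of labels. The labels are hypothetical (2 positives out of 100), not the held-out data from this post, and undefined ratios are treated as 0 here, which is a common convention rather than something the tool is known to do.

```python
def per_class_scores(y_true, y_pred, label):
    """Precision, recall, and F1 for one class; undefined ratios become 0."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)
    fp = sum(t != label and p == label for t, p in pairs)
    fn = sum(t == label and p != label for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Hypothetical labels: 98 non-clicks and 2 clicks.
y_true = [0] * 98 + [1] * 2
# A model that never predicts a click.
y_pred = [0] * 100

scores = {label: per_class_scores(y_true, y_pred, label) for label in (0, 1)}
macro_f1 = sum(f1 for _, _, f1 in scores.values()) / len(scores)
print(scores[1])   # precision, recall, F1 for class 1
print(macro_f1)
```

Because class 1 is never predicted, its precision, recall, and F1 are all zero, and the macro F1 is pulled down to roughly half of the class 0 score even though overall accuracy is high.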

Measuring Impact

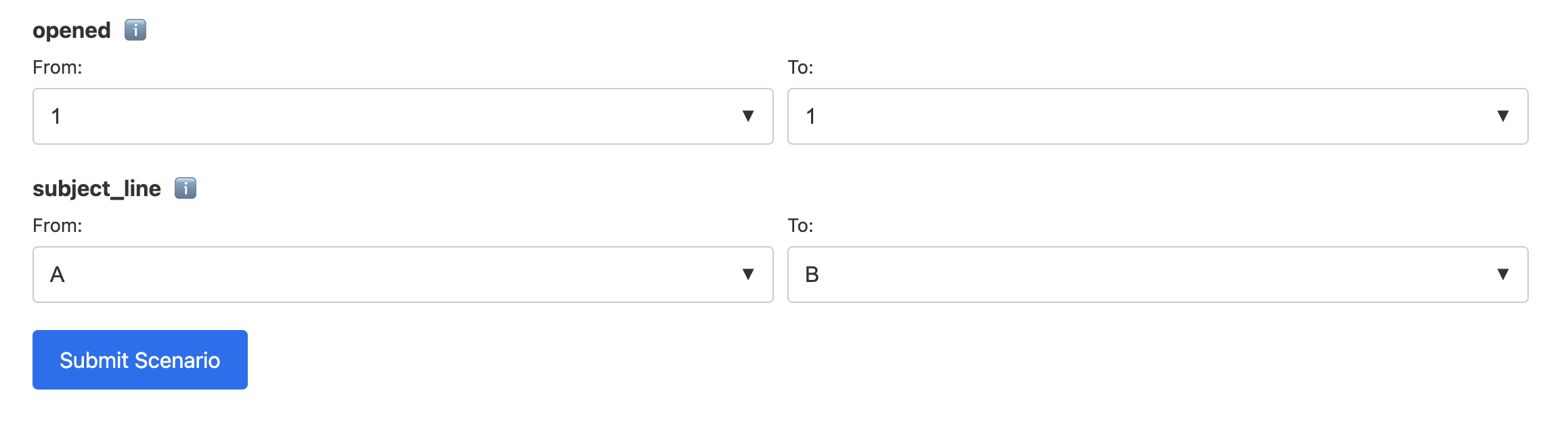

We then scroll down and set the target class, clicked, to equal 1. Since CTR is clicked conditional on opened, we also set opened to equal 1 in both the before and after scenarios. Finally, to measure the impact of the two subject lines, we set From to A and To to B. For concreteness, the image below shows these settings in the UI.

We can now click Submit Scenario to estimate the impact of each subject line. After running this we will get results of the scenario as well as plots.

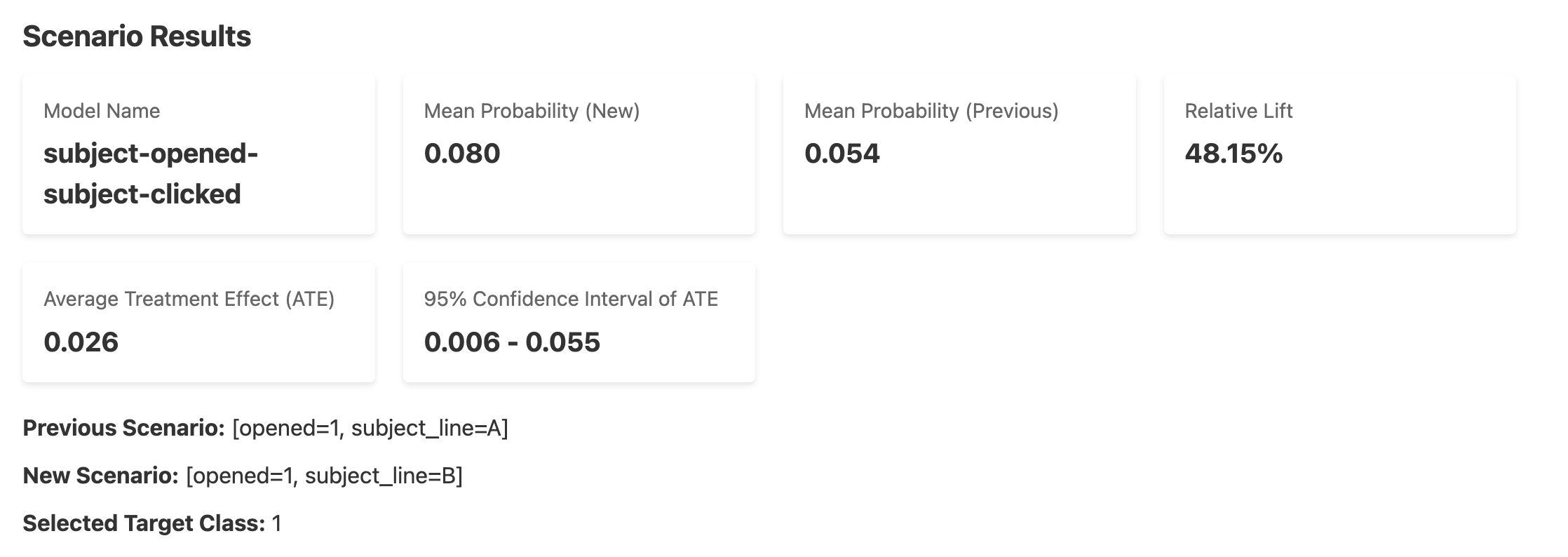

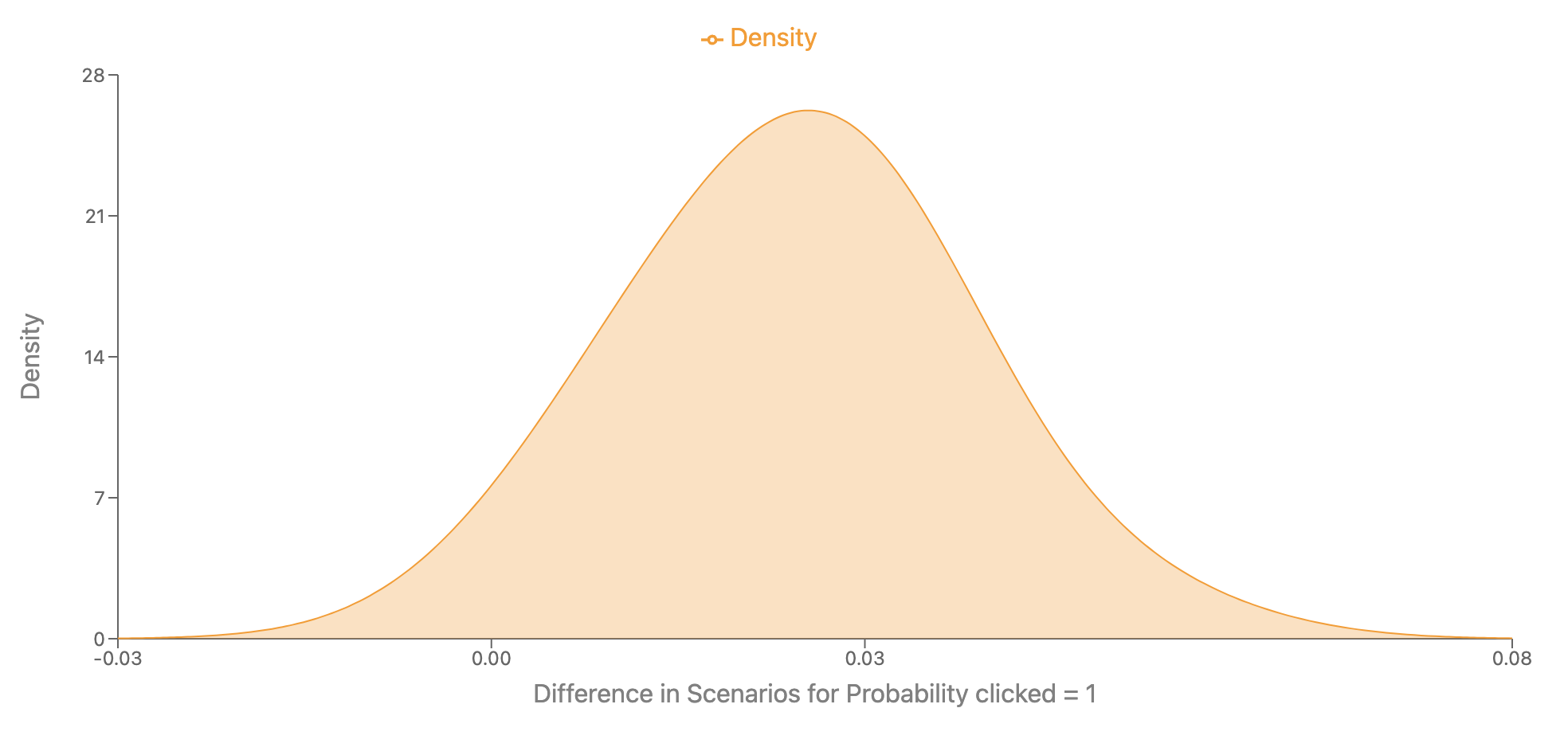

These metrics show that the new scenario, which used subject line B, had a 48.15 percent relative lift over the scenario that used subject line A. The mean probability a user would click after opening an email with subject line A was 0.054, while that probability jumped to 0.08 for subject line B. The difference is the ATE, which is 0.026. By looking at the difference in these scenarios, we can see that the lower and upper values in the 95 percent confidence interval are on the same side of zero, which means this result is statistically significant at the 95 percent threshold.
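The ATE and relative lift follow directly from the two mean probabilities reported above. The short sketch below reproduces that arithmetic; the function names are our own and are not part of Daggy.

```python
def ate(p_before, p_after):
    """Average treatment effect as the difference in mean probabilities."""
    return p_after - p_before

def relative_lift(p_before, p_after):
    """Change relative to the baseline (before) probability."""
    return (p_after - p_before) / p_before

p_a = 0.054  # mean click probability under subject line A (from the results)
p_b = 0.08   # mean click probability under subject line B (from the results)

print(f"ATE: {ate(p_a, p_b):.3f}")                      # ATE: 0.026
print(f"Relative lift: {relative_lift(p_a, p_b):.2%}")  # Relative lift: 48.15%
```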

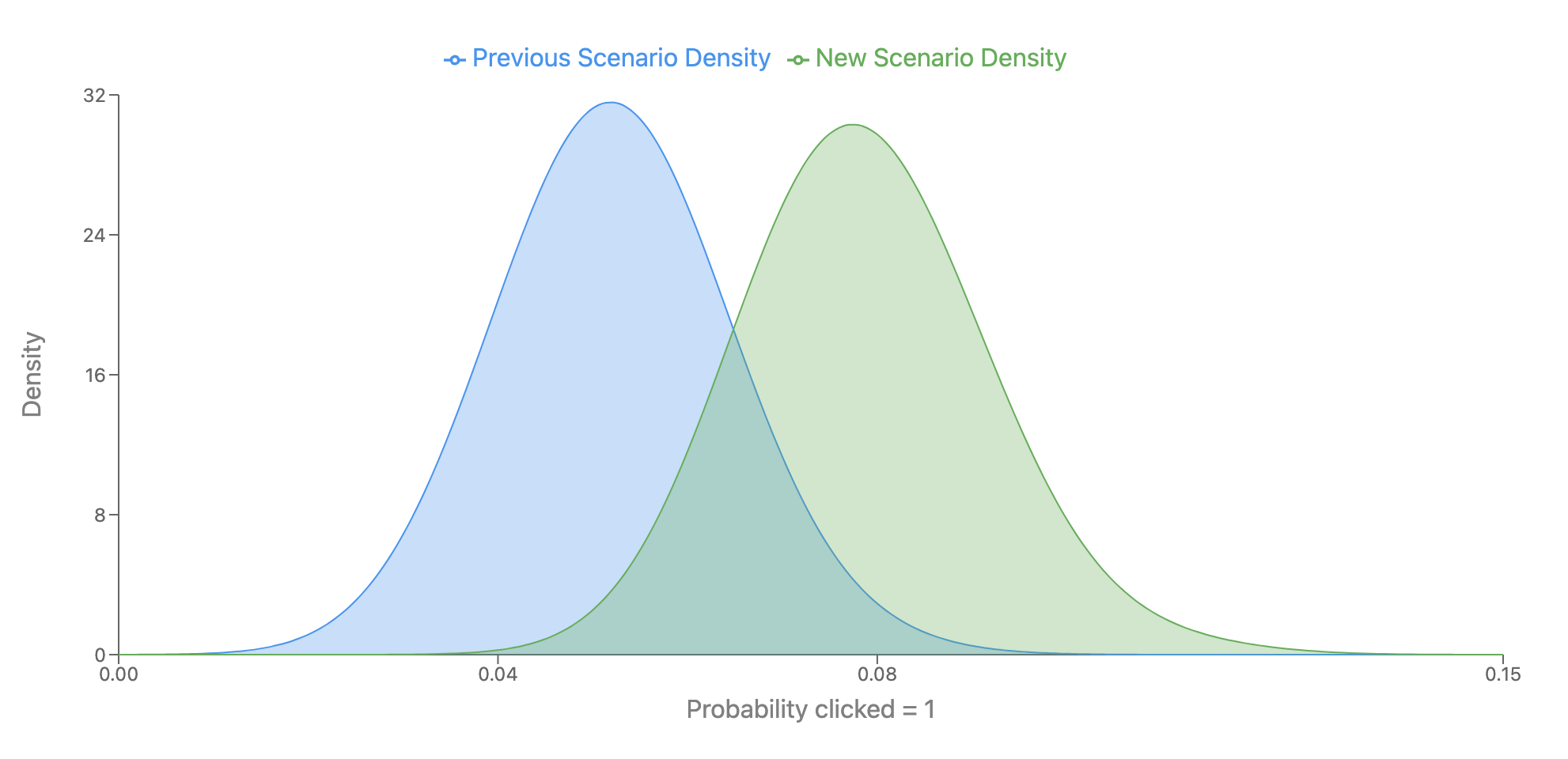

We can look at the distributions in the two plots below.

The first plot shows the probability distributions of the CTR under Subject Line A (Previous Scenario) and Subject Line B (New Scenario). We can see that the new scenario is mostly greater than the old scenario, but not always. The peaks of these distributions are equal to the mean probabilities in the results above.

The second plot shows the distribution of the difference between the two scenarios. We can see that most of the density (just over 97.5 percent of it) is greater than 0. The 95 percent confidence interval reported above is taken from this distribution.
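One way to read an interval and the share of density above zero off a distribution of differences is sketched below. How Daggy actually produces this distribution is not described here, so the draws are synthetic: they are generated from a normal distribution centered on the reported ATE with an arbitrary placeholder spread, purely to have something to summarize.

```python
import random
import statistics

def summarize_difference(draws):
    """Return the 2.5th and 97.5th percentiles and the share of draws above 0."""
    cuts = statistics.quantiles(draws, n=40)  # 39 cut points, 2.5 percent apart
    lower, upper = cuts[0], cuts[-1]
    share_positive = sum(d > 0 for d in draws) / len(draws)
    return lower, upper, share_positive

rng = random.Random(0)
# Synthetic draws (assumption): mean 0.026 from the results, spread 0.012 invented.
draws = [rng.gauss(0.026, 0.012) for _ in range(10_000)]

lower, upper, share_positive = summarize_difference(draws)
print(f"95% interval: ({lower:.4f}, {upper:.4f})")
print(f"Share of draws above zero: {share_positive:.3f}")
```

If both ends of the interval sit above zero, at least 97.5 percent of the draws must also be above zero, which is the relationship described in the text.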

Conclusion

Overall, the analysis above shows that Subject Line B is much more effective than Subject Line A. The relative lift from A to B is 48.15 percent, which is very large. Not only is this result meaningful for the business, but it is also statistically significant. The data here strongly suggest we should use Subject Line B.